In virtual reality (VR) systems, we often talk about “immersion” as a primary goal for user experience. From the optical designer’s perspective, an immersive VR headset produces a field of view (FOV) that rivals a person’s natural field of vision. In practice, we strike a balance between our 200° binocular visual field and a FOV that is feasible with existing display and eyepiece technologies. Most VR headsets produce a full FOV of 80° to 110°.

Even with this compromise, designing an eyepiece that projects such a wide FOV is far from trivial. A 90° FOV eyepiece can be as big as a coffee mug (just see the photo below!). These refractive eyepieces comprise 7 to 10 lens elements and can easily weigh a couple of pounds.

Most commercial VR headsets sidestep this problem by placing a large (3-4”) LCD or AMOLED panel close to the eye, relying on the eyepiece to project the display to a comfortable virtual distance. The apparent FOV is largely achieved via the display’s size and proximity to the eye.

Recent advances in OLED and microLED display technology have sparked renewed interest in incorporating smaller displays into VR systems. These so-called direct-view microdisplays offer several advantages over LCD and AMOLED panels:

- Higher luminance

- Higher contrast ratio

- Wider viewing angle

- Smaller electronics package size

- Smaller pixels

It’s tempting to expect that a microdisplay will lead to correspondingly small “micro-optics.” This is not the case: a smaller display shifts the burden of FOV from the display to the eyepiece. Now the eyepiece must not only project the display to a comfortable virtual distance, but also magnify the small (typically 1” diagonal or less) microdisplay into a large virtual field. Because higher magnification requires higher optical power (i.e., a shorter focal length), the eyepiece becomes more complex.

Take, for instance, a 1” microdisplay and a 100° FOV eyepiece following a fisheye (fθ) distortion profile. The corresponding focal length of the eyepiece is 14.5mm. This short focal length is nearly equal to the eye relief distance needed for user comfort. Optical engineers will recognize this as a significant lens design challenge; it is one of the reasons equivalent telescope eyepieces are so large.
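The 14.5mm figure can be checked with a short calculation. The sketch below assumes that both the 1” display size and the 100° FOV refer to the diagonal, so the display’s 12.7mm half-diagonal maps to a 50° half-field angle through the fθ relation h = f·θ (θ in radians). The function name is illustrative only, not from any library.

```python
import math


def ftheta_focal_length_mm(display_diagonal_mm: float, full_fov_deg: float) -> float:
    """Return the focal length of an f-theta (fisheye) eyepiece, in mm.

    Assumption: the display diagonal fills the full diagonal FOV, so the
    half-diagonal image height h and the half-field angle theta obey h = f * theta.
    """
    half_height_mm = display_diagonal_mm / 2.0         # 25.4 mm / 2 = 12.7 mm
    half_field_rad = math.radians(full_fov_deg / 2.0)  # 50 deg is about 0.873 rad
    return half_height_mm / half_field_rad


# 1-inch (25.4 mm) diagonal microdisplay spread over a 100-degree diagonal FOV
f = ftheta_focal_length_mm(display_diagonal_mm=25.4, full_fov_deg=100.0)
print(f"f = {f:.2f} mm")
```

The result, about 14.55mm, agrees with the 14.5mm quoted above. Because only the ratio h/θ matters, a larger microdisplay at the same FOV would lengthen the focal length in proportion, which is one concrete form of the display-size versus lens-complexity trade-off.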

Etendue, closely tied to lens speed, presents a second challenge for this 14.5mm eyepiece. VR systems require a certain amount of eye box: the area in which the user’s eye pupil can be positioned. The larger the eye box, the more comfortable and immersive the user experience (imagine using a microscope where the image turns black when you move your head; a larger eye box reduces this effect). A 14.5mm focal length and a 10mm eye box correspond to an f/1.45 lens speed (14.5mm ÷ 10mm), compared with the approximately f/4 apertures of large AMOLED-based headsets. This effectively requires collecting light over a wider range of display viewing angles (i.e., a higher-etendue system). Fortunately, direct-view microdisplays are much better than LCDs and AMOLEDs at supporting these wider viewing angles. However, this creates even more headaches for the lens designer, who must corral all of these rays into a high-quality image.
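The lens-speed comparison can be sketched the same way. This uses a simplified thin-lens picture, which is an assumption and not a full etendue calculation: the f-number N is focal length over eye box width, and a display pixel in the focal plane must fill a collimated beam as wide as the eye box, so it has to emit over a cone with half-angle of roughly atan(1 / (2N)). The function names are hypothetical.

```python
import math


def f_number(focal_length_mm: float, eye_box_mm: float) -> float:
    # Lens speed as used in the text: focal length divided by eye box width.
    return focal_length_mm / eye_box_mm


def display_cone_half_angle_deg(n: float) -> float:
    # Thin-lens sketch (assumption): a pixel at the focal plane must fill a
    # collimated beam as wide as the eye box D, so its emission cone has
    # half-angle atan(D / (2 f)) = atan(1 / (2 N)).
    return math.degrees(math.atan(1.0 / (2.0 * n)))


micro_n = f_number(focal_length_mm=14.5, eye_box_mm=10.0)  # microdisplay eyepiece
amoled_n = 4.0  # approximate AMOLED-headset value quoted in the text

for label, n in [("microdisplay", micro_n), ("AMOLED", amoled_n)]:
    half_angle = display_cone_half_angle_deg(n)
    print(f"{label}: f/{n:.2f}, display cone half-angle ~{half_angle:.1f} deg")
```

Under this model the f/1.45 eyepiece asks each pixel to emit usefully over a cone of roughly 19° half-angle, versus about 7° at f/4. This is why the wide viewing angles of direct-view microdisplays matter here.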

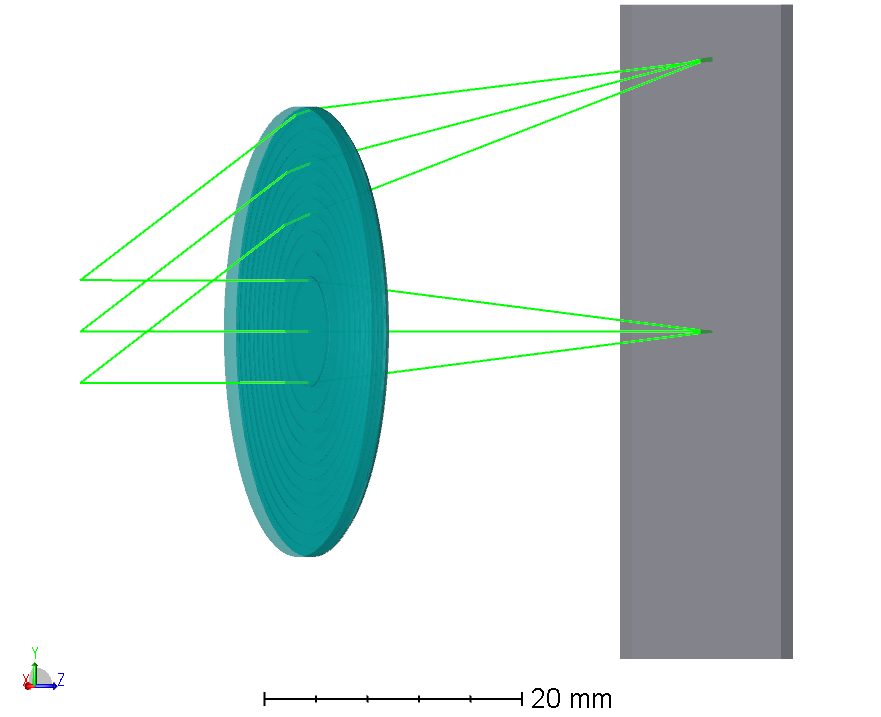

Fig. 1: Raytrace for a Fresnel eyepiece and large AMOLED display.

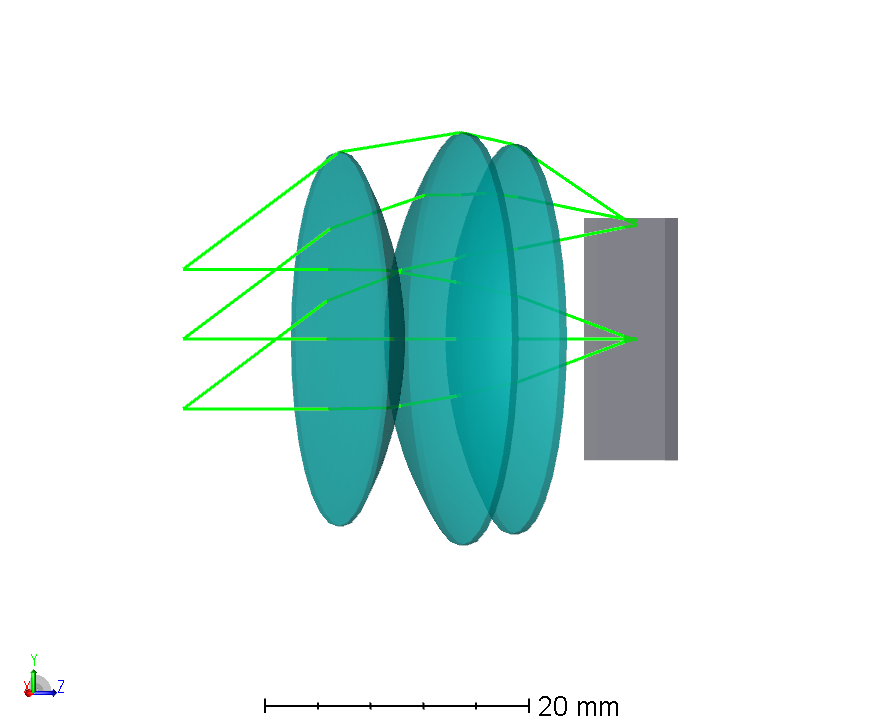

Fig. 2: Raytrace for a compound eyepiece and microdisplay. The higher etendue (larger ray bundles) collected from the display requires more optical power than in the AMOLED case. Multiple lens elements are needed to bend the topmost ray around toward the eye.

These challenges are not insurmountable. Introducing other surface types, such as Fresnel and reflective optics, can reduce the size and complexity of a VR eyepiece. Microdisplays may also grow larger as fabrication capabilities improve, giving designers more freedom to trade display size against lens complexity. With no obvious display choice, Optikos finds that the first phase of every VR product design must focus on specification development. Finding the right balance among optics, electronics, package size, and application requirements is the key to defining an appropriate architecture with the technologies available.

Return to Anywhere Light Goes blog.

Written by Kevin Sweeney, Optikos Corporation